AI bias in education systems is a pressing concern that has emerged as technology increasingly shapes the learning landscape. As artificial intelligence becomes integral in educational tools and assessments, understanding how bias infiltrates these systems is crucial. This exploration reveals how AI bias manifests, affecting student experiences and outcomes in ways that often go unnoticed.

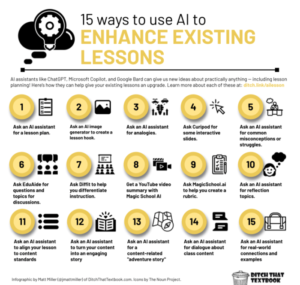

From automated grading systems to personalized learning platforms, AI tools have the potential to enhance education. However, they also risk perpetuating existing inequalities if not carefully scrutinized. By examining examples of AI bias in educational technologies and the specific impacts on diverse student demographics, we can better comprehend the challenges and opportunities that lie ahead.

Understanding AI Bias in Education

AI bias refers to the systematic favoritism or discrimination that emerges from artificial intelligence systems, often rooted in the data and algorithms that underpin these technologies. In educational contexts, AI can be harnessed for various purposes, such as personalized learning, grading, and student assessment. However, when biases seep into these processes, they can significantly impact student experiences and outcomes.

AI bias in education systems can take several forms, including algorithmic bias, data bias, and the reinforcement of existing disparities.

Algorithmic bias occurs when the algorithms used in educational tools reflect societal inequalities or prejudices. Data bias arises when the training data used to build AI systems contains historical biases or is not representative of the diverse student population. Such biases can lead to unfair assessments, misinterpretation of student capabilities, and a lack of access to essential resources for marginalized groups.
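One way to make the idea of unrepresentative training data concrete is to compare each group's share of the training records with its share of the student population. The sketch below is illustrative only: the function name `representation_gaps`, the group labels, and all of the numbers are hypothetical, and a real check would need agreed group definitions and reliable population figures.

```python
from collections import Counter


def representation_gaps(training_groups, population_shares):
    """Return (share in training data - share in population) for each group.

    A negative value means the group is under-represented in the training data.
    """
    counts = Counter(training_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("training_groups must not be empty")
    gaps = {}
    for group, expected_share in population_shares.items():
        observed_share = counts.get(group, 0) / total
        gaps[group] = observed_share - expected_share
    return gaps


# Hypothetical example: 10 training records and assumed population shares.
training_groups = ["A"] * 8 + ["B"] * 2
population_shares = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(training_groups, population_shares)
for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.2f}")
```

In this made-up case, group B makes up half of the population but only a fifth of the training records, which is the kind of gap an audit would want to surface before a tool is trusted.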

Impact of AI Bias on Student Learning and Outcomes

The effects of AI bias on student learning and overall educational outcomes can be profound and detrimental. When biases infiltrate educational technologies, they can result in a range of negative implications that hinder equitable learning opportunities. For instance, some AI-powered tutoring systems may provide more assistance or recognition to students from certain demographic backgrounds while neglecting others. This can create an environment where some students are consistently favored over their peers, leading to a widening achievement gap.

In addition, biased grading systems can unfairly penalize students based on their race, gender, or socioeconomic status. This can have serious repercussions on students’ mental health and self-esteem, potentially discouraging them from pursuing their academic goals.

Several examples illustrate how AI bias appears in educational technology tools:

- Admissions Algorithms: Some universities utilize AI to predict student success based on past data. If this data reflects historical biases, it can unfairly disadvantage certain groups of applicants.

- Automated Essay Scoring: AI systems designed to evaluate student writing may favor certain linguistic styles or cultural expressions over others, leading to biased assessments.

- Learning Analytics Tools: These tools track student performance and engagement. If they are programmed based on biased assumptions, they can misinterpret a student’s potential or need for support.
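For automated scoring in particular, a simple first check is to compare the AI's scores with human scores for the same work, broken down by student group. The following is a minimal sketch under stated assumptions: the record layout `(group, ai_score, human_score)`, the group names, and the scores are all invented for illustration, and human scores are treated as the reference point even though they can carry bias of their own.

```python
from collections import defaultdict
from statistics import mean


def mean_score_gap_by_group(records):
    """Average (AI score - human score) per group.

    A negative value means the AI tends to score that group lower
    than human graders did on the same work.
    """
    differences = defaultdict(list)
    for group, ai_score, human_score in records:
        differences[group].append(ai_score - human_score)
    return {group: mean(values) for group, values in differences.items()}


# Hypothetical essay scores on a 1-5 scale: (group, ai_score, human_score).
records = [
    ("group_1", 4, 4), ("group_1", 5, 4), ("group_1", 3, 3),
    ("group_2", 3, 4), ("group_2", 2, 4), ("group_2", 4, 4),
]

score_gaps = mean_score_gap_by_group(records)
for group, gap in sorted(score_gaps.items()):
    print(f"{group}: average AI-minus-human gap = {gap:+.2f}")
```

A consistent negative gap for one group, as in the invented data here, would not prove bias by itself, but it would be a reasonable trigger for a closer review of the scoring tool.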

The integration of AI in education holds promise for enhancing learning experiences, but it is critical to recognize and address the biases that can arise within these systems. By doing so, educators and policymakers can work towards ensuring a more equitable educational landscape for all students.

Understanding and mitigating AI bias is essential in fostering an inclusive educational environment where every student has the opportunity to thrive.

Addressing AI Bias in Educational Policies

![Glossary of AI Risk Terminology and common AI terms [AI Impacts Wiki]](https://hbhotch.info/wp-content/uploads/2025/10/abstract-ad-ai-artificial-intelligence-wallpaper-preview.jpg)

In today’s rapidly evolving educational landscape, integrating AI technologies brings both opportunities and challenges. One of the most pressing issues is the bias that can emerge from these systems, impacting fairness and equity in educational experiences. Developing robust policies to address AI bias is essential for creating inclusive educational environments where all students can thrive.

Creating policies that mitigate AI bias in education involves several critical steps.

First, it is vital to conduct thorough audits of existing AI tools to identify any biases that may affect student outcomes. This can be complemented by engaging diverse stakeholder groups in the policy-making process, ensuring that multiple perspectives inform the creation of these policies. Training educators on the principles of equity and fairness in AI use is also crucial, empowering them to make informed decisions when implementing AI tools in their classrooms.

Steps for Creating Effective AI Bias Policies

Establishing effective policies begins with a clear understanding of the challenges posed by AI bias. The following steps outline a comprehensive approach to policy creation:

- Conduct a comprehensive analysis of AI tools to identify potential biases affecting various demographics.

- Incorporate diverse stakeholder feedback, including students, parents, educators, and community members, in developing policies.

- Implement training programs for educators on identifying and mitigating AI bias, emphasizing ethical AI usage.

- Regularly review and update policies to adapt to new findings and technological advancements in AI.

- Establish clear accountability measures to ensure compliance with anti-bias policies among educational institutions.

Equitable use of AI tools in education requires educators to adopt best practices that prioritize fairness and inclusion. By following these guidelines, educators can help ensure that AI serves as a positive force in learning environments.

Best Practices for Educators

The integration of AI tools in classrooms can be beneficial when approached thoughtfully. Here are key practices for educators to follow:

- Foster a culture of awareness around AI bias and its impact on student learning and evaluation.

- Utilize AI tools that have been validated for fairness across different student demographics, ensuring equitable outcomes.

- Encourage student feedback on AI usage, creating a loop for continuous improvement and responsiveness to student needs.

- Collaborate with peers to share insights and experiences regarding the use of AI tools, promoting a community of practice focused on equity.

- Engage in ongoing professional development opportunities to remain informed about advances in AI and its implications for education.

The role of stakeholders in addressing AI bias cannot be overstated. It requires a collective effort from various parties to create a fair educational landscape.

Stakeholder Engagement in AI Bias Mitigation

Effective mitigation of AI bias in educational institutions relies heavily on the collaboration of stakeholders. This includes educators, administrators, policymakers, parents, and students. Each group plays a vital role in fostering an environment that recognizes and addresses bias.

- Educators should advocate for the inclusion of bias mitigation strategies in AI tool development and deployment.

- Administrators must prioritize funding and resources towards training and tools that promote equity in AI use.

- Policymakers are responsible for enacting laws and regulations that enforce anti-bias measures in educational AI applications.

- Parents and community members can voice concerns and contribute their insights to ensure that AI tools reflect the diverse needs of students.

- Students should be seen as active participants in discussions about AI, providing valuable feedback on the tools they use.

Collaboration among stakeholders is essential for creating a balanced approach to AI in education, ensuring that all voices are heard and considered.

AI Bias and Its Societal Implications

AI bias in education systems has far-reaching effects that extend beyond individual learners to impact entire communities. As AI technologies become increasingly integrated into educational policies and practices, it is essential to understand how these biases manifest and affect diverse demographic groups. Different students experience varying levels of impact based on their race, gender, socio-economic status, and other factors, leading to significant disparities in educational opportunities and outcomes.

AI bias is especially harmful because it can entrench existing inequalities in education.

When algorithms are trained on biased data, they often produce biased outcomes, reinforcing stereotypes and limiting access for marginalized groups. For instance, an AI assessment tool may unfairly disadvantage students from lower socio-economic backgrounds due to a lack of access to technology or educational resources. As a result, these biases can perpetuate the cycle of disadvantage, hindering social mobility and educational equity.

Effects of AI Bias in Education Across Demographic Groups

AI bias can disproportionately affect various demographic groups, leading to significant inequalities in educational outcomes. Understanding the nuances of these effects is vital for educators and policymakers. The following points highlight how different demographics can be impacted:

- Students of color may face lower assessment scores due to biased algorithms that fail to account for cultural context, leading to potential misjudgment of their abilities.

- Gender biases in AI tools can marginalize female students, particularly in STEM fields, where historical data may underrepresent their achievements.

- Students with disabilities may encounter AI systems that inadequately address their unique learning needs, resulting in unfair evaluations and limited support.

- Socio-economic disparities can widen as AI systems favor students with more resources, leaving economically disadvantaged students behind in competitive assessments.

Framework for Recognizing and Counteracting Biases in AI-Driven Assessments

Creating a framework to recognize and counteract biases in AI-driven assessments is crucial for ensuring equitable educational practices. Educators can implement the following strategies to actively address AI bias:

- Regular Audits: Conduct periodic evaluations of AI tools to identify and rectify biases in the algorithms and the data they utilize.

- Inclusive Data Sets: Use diverse and representative data sets in training AI systems to minimize bias and ensure that all demographic groups are accurately represented.

- Transparency: Ensure that educators and stakeholders understand how AI tools make decisions, promoting an environment of accountability and trust.

- Professional Development: Provide ongoing training for educators on recognizing bias in AI and its impacts on student assessment, helping them to critically assess AI-driven tools.

- Feedback Mechanisms: Establish channels through which students and parents can provide feedback on AI-driven assessments, helping to identify and address bias in real time.
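As a rough illustration of what a regular audit might compute, the sketch below compares how often an AI tool gives each student group a favorable outcome, such as a passing grade or a recommendation for an advanced course. Everything here is an assumption made for the example: the function names, the group labels, the data, and the 0.8 review threshold, which borrows the "four-fifths" rule of thumb from US employment guidelines and is not an established standard for education.

```python
def favorable_rates(outcomes):
    """Share of favorable outcomes per group, from (group, is_favorable) pairs."""
    totals = {}
    favorable = {}
    for group, is_favorable in outcomes:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + int(is_favorable)
    return {group: favorable[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so no disparity
    return min(rates.values()) / highest


# Hypothetical audit data: 10 students per group.
outcomes = (
    [("group_1", True)] * 8 + [("group_1", False)] * 2
    + [("group_2", True)] * 5 + [("group_2", False)] * 5
)

REVIEW_THRESHOLD = 0.8  # assumed cutoff, chosen only for illustration

rates = favorable_rates(outcomes)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 3), needs_review)
```

A single ratio like this is a screening signal rather than a verdict. It works best alongside the transparency and feedback measures listed above, since a flagged tool still needs human review to understand why the rates differ.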

“AI bias is not just a technical issue; it is a societal challenge that requires collective action from all stakeholders in education.”

Last Word

In summary, recognizing and addressing AI bias in education systems is essential for fostering equitable learning environments. As we navigate the complexities of integrating AI into education, it is vital for educators, policymakers, and stakeholders to collaborate in creating inclusive strategies. Ultimately, mitigating AI bias not only benefits individual learners but also promotes a fairer educational landscape for all.

FAQ Compilation

What is AI bias in education?

AI bias in education refers to the systematic favoritism or discrimination embedded in AI algorithms that can affect student learning and outcomes.

How does AI bias impact students?

AI bias can lead to unequal learning opportunities, reinforce stereotypes, and negatively influence assessment results for certain demographic groups.

What are common examples of AI bias in educational tools?

Common examples include biased grading algorithms, flawed recommendation systems in learning platforms, and inequitable access to tailored educational resources.

How can educators address AI bias?

Educators can address AI bias by critically evaluating the tools they use, advocating for inclusive policies, and promoting awareness of the potential biases within AI systems.

What role do stakeholders play in mitigating AI bias?

Stakeholders, including policymakers, educators, and technology developers, must work together to implement guidelines and best practices that reduce AI bias in education.